In the summer of 2020, online trash talking between FiveThirtyEight’s Nate Silver and The Economist’s G. Elliott Morris sparked the “Model Wars.” Now that the dust has settled, I’m comparing the Brier scores and the number of correctly called states from their final state-level model outputs on November 3, 2020.

Please note that the models didn’t have the same number of outputs. The Economist did not forecast Maine or Nebraska congressional districts, although FiveThirtyEight did (and correctly predicted four out of five). I’m only using the 50 states and Washington, D.C. in my comparisons.

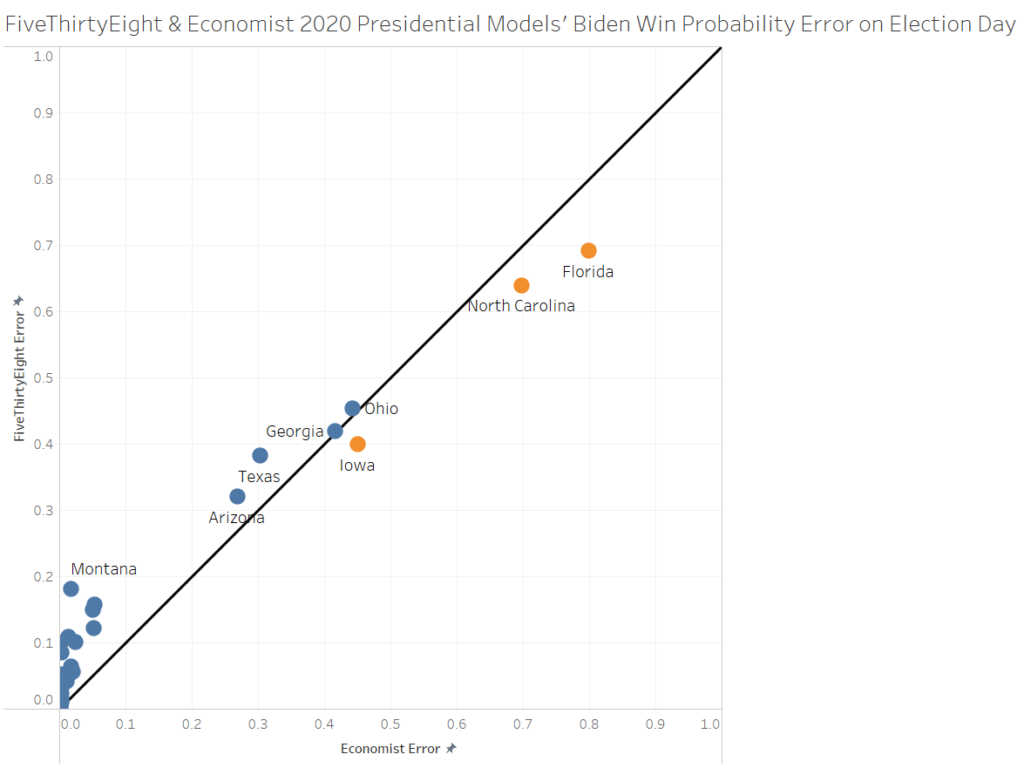

FiveThirtyEight had the lower (meaning better) Brier score, although both models called the same 49 states correctly using a 0.5 threshold. Both incorrectly predicted Biden victories in Florida and North Carolina:

| Model | Brier score | Correct state winners |
| --- | --- | --- |
| Economist | 0.0368 | 49 / 51 |
| FiveThirtyEight | 0.0359 | 49 / 51 |
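For readers who want to reproduce this kind of comparison, here is a minimal sketch of the two metrics in the table: the Brier score (the mean squared difference between a forecast probability and the 0/1 outcome) and the number of winners called correctly at a 0.5 threshold. The function names and the four-state data are hypothetical and purely illustrative; they are not the actual model outputs.

```python
def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if len(probs) != len(outcomes):
        raise ValueError("probs and outcomes must have the same length")
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


def correct_calls(probs, outcomes, threshold=0.5):
    """Count forecasts that land on the right side of the threshold."""
    return sum((p > threshold) == bool(o) for p, o in zip(probs, outcomes))


# Hypothetical Biden win probabilities for four made-up states,
# and whether Biden actually won each one (1 = yes, 0 = no).
biden_probs = [0.9, 0.7, 0.2, 0.6]
biden_won = [1, 0, 0, 1]

print(round(brier_score(biden_probs, biden_won), 4))  # 0.175
print(correct_calls(biden_probs, biden_won))          # 3
```

Lower is better for the Brier score: a confident miss (0.7 on a state that was lost) costs far more than a cautious one.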

While FiveThirtyEight’s model was certain (model output was “1” or “0”) about the outcome in only Washington, D.C., The Economist’s model had certainty about the results in 20 states: Alabama, Arkansas, California, Connecticut, D.C., Delaware, Hawaii, Idaho, Kentucky, Maryland, Massachusetts, New Jersey, New York, North Dakota, Oklahoma, Rhode Island, Vermont, Washington, West Virginia, and Wyoming. Both The Economist and FiveThirtyEight models called all 20 of those state winners correctly.

If we remove those 20 “certain” states and look at the remaining 31 “swing” states, we find that FiveThirtyEight’s model still had a lower Brier score:

| Model | Brier score | Correct state winners |
| --- | --- | --- |
| Economist | 0.0605 | 29 / 31 |
| FiveThirtyEight | 0.0590 | 29 / 31 |
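The filtering step can be sketched as follows: drop every state where one model's output is exactly 0 or 1, then score both models on what is left. The state labels and probabilities here are hypothetical placeholders, and treating "certain" as an exact 0 or 1 is an assumption about how the outputs were stored.

```python
def brier_score(probs, outcomes):
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    return sum((p - o) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


# Hypothetical Biden win probabilities for four made-up states.
economist = {"A": 1.0, "B": 0.8, "C": 0.0, "D": 0.4}
fivethirtyeight = {"A": 0.99, "B": 0.7, "C": 0.02, "D": 0.45}
biden_won = {"A": 1, "B": 1, "C": 0, "D": 0}

# Keep only states where the first model was not "certain" (output of 0 or 1).
swing_states = [state for state, p in economist.items() if p not in (0.0, 1.0)]

outcomes = [biden_won[s] for s in swing_states]
economist_swing = brier_score([economist[s] for s in swing_states], outcomes)
fivethirtyeight_swing = brier_score([fivethirtyeight[s] for s in swing_states], outcomes)

print(swing_states)  # ['B', 'D']
```

Because certain, correct predictions contribute zero squared error, removing them shrinks the denominator without changing the numerator, which is why the swing-state scores are higher than the all-state scores.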

The Economist had smaller error than FiveThirtyEight in 47 out of 51 predictions (both had no error in D.C.). FiveThirtyEight’s lower Brier score is driven by its more cautious predictions in Florida and North Carolina, the two states both models got wrong. If we remove those two states and look at predictions for Trump and Biden in the remaining 49, The Economist had a lower Brier score than FiveThirtyEight (0.0153 to 0.0193).
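A back-of-the-envelope check shows how much those two states matter. Since a Brier score is a mean, multiplying it by the number of predictions recovers the total squared error, and the gap between the 51-state and 49-state totals is what Florida and North Carolina contributed. This sketch uses only the rounded scores quoted above and assumes the 49-state figures average the same per-state squared errors, so the results are approximate.

```python
# (score over all 51, score over the 49 without Florida and North Carolina)
published = {
    "Economist": (0.0368, 0.0153),
    "FiveThirtyEight": (0.0359, 0.0193),
}

fl_nc_error = {}
fl_nc_share = {}
for model, (score_51, score_49) in published.items():
    total_error = score_51 * 51  # summed squared error, all 51
    rest_error = score_49 * 49   # summed squared error, the other 49
    fl_nc_error[model] = total_error - rest_error
    fl_nc_share[model] = fl_nc_error[model] / total_error

for model in published:
    print(model, round(fl_nc_error[model], 2), f"{fl_nc_share[model]:.0%}")
```

On these rounded figures, the two misses account for roughly 1.13 of The Economist’s total squared error (about 60%) and roughly 0.89 of FiveThirtyEight’s (about 48%).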

The “state” with the closest probabilities was Washington, D.C., where both models gave Biden an identical win probability of 1. After D.C., the closest output was Delaware, where FiveThirtyEight’s Biden win probability was 0.99995 and The Economist’s was 1. Surprisingly, the state with the greatest difference in probabilities was one where both models correctly predicted the outcome: Trump winning Montana. FiveThirtyEight gave Trump a 0.8197 probability of winning while The Economist’s model had Trump at 0.9827.

Another group of forecasters was just as accurate as FiveThirtyEight and The Economist, if not more so: British bookmakers. If we take the implied probabilities of the bookmakers’ odds, we can compare them with both forecast models’ outputs.
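As a minimal sketch of that conversion, fractional odds of a/b imply a win probability of b / (a + b). The odds below are hypothetical, not actual quotes from any bookmaker, and the sketch leaves the probabilities unnormalized, which is an assumption about how the comparison was made.

```python
def implied_probability(fractional_odds: str) -> float:
    """Convert fractional odds such as '5/6' to an implied win probability."""
    numerator, denominator = (int(part) for part in fractional_odds.split("/"))
    return denominator / (numerator + denominator)


# Hypothetical odds for one state from one bookmaker.
biden = implied_probability("5/6")
trump = implied_probability("5/6")

print(round(biden, 3), round(trump, 3))  # 0.545 0.545
print(round(biden + trump, 3))           # 1.091
```

The two implied probabilities sum to more than 1 because bookmakers build a margin into their prices, so the raw figures are not a proper probability distribution unless they are rescaled.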

Sky Bet, Betfred, and Boylesports were the only three bookmakers on oddschecker.com on the morning of Election Day that offered action for Trump and Biden in all 50 states (plus D.C.).

All three bookmakers had lower Brier scores than The Economist and FiveThirtyEight. Comparing state winners becomes a little tricky because bookmakers can have implied probabilities above 0.5 for both candidates. If we compare all 102 predictions (51 states × 2 candidates), then Boylesports and Sky Bet predicted more correct winners (99/102), while Betfred had the same number of correct predictions (98/102) as the two presidential forecasts.

| Platform | Brier score | Correct predictions |
| --- | --- | --- |
| Economist | 0.0368 | 98 / 102 |
| FiveThirtyEight | 0.0359 | 98 / 102 |
| Betfred | 0.0346 | 98 / 102 |
| Boylesports | 0.0338 | 99 / 102 |
| Sky Bet | 0.0332 | 99 / 102 |
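Counting 102 predictions treats every candidate-state pair as its own forecast. For a model whose two probabilities sum to 1, each state is either two hits or two misses, so 49/51 becomes 98/102. An odd total like 99 can only arise when a bookmaker’s two prices in a state do not mirror each other, for example when both candidates are priced above 0.5. Here is a small sketch with hypothetical numbers for two made-up states:

```python
def correct_calls(predictions, threshold=0.5):
    """Count (probability, candidate_won) pairs on the right side of the threshold."""
    return sum((p > threshold) == won for p, won in predictions)


# Each tuple is (probability for a candidate, whether that candidate won).
# Model probabilities sum to 1 within each state.
model = [(0.7, True), (0.3, False),   # state 1: called correctly
         (0.6, False), (0.4, True)]   # state 2: called incorrectly

# Bookmaker implied probabilities may exceed 0.5 for both candidates.
bookmaker = [(0.72, True), (0.33, False),    # state 1
             (0.545, False), (0.545, True)]  # state 2: both priced above 0.5

print(correct_calls(model))      # 2
print(correct_calls(bookmaker))  # 3
```

In the second state the bookmaker gets credit for the winner and is penalized for the loser, so it picks up one of two predictions where the model picks up none.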

At the end of the day, it’s hard to crown a “winner” when all the models and implied probabilities were close together and highly accurate. Based on their Brier scores and predicted state winners, FiveThirtyEight was slightly more accurate than The Economist, while a few British bookmakers were slightly more accurate than FiveThirtyEight.