Prediction markets have drawn some ridicule for their poor performance in the 2022 Senate elections, but the data on individual Senate races tells a less clear story.

Below we look at the state-level forecasts for 2022 Senate races from the following sources:

- Three election models (FiveThirtyEight, The Economist, & DDHQ)

- Four betting platforms (William Hill, Coral, Betfred, & SBK)

- One betting exchange (Smarkets)

- Three prediction markets (PredictIt, Polymarket, & Insight Prediction)

- One forecasting platform (Metaculus)

We compare these 12 platforms in two ways:

- Accuracy at predicting each race's winner, using a 0.50 threshold to classify a forecast as favoring a candidate to win or to lose.

- Brier scores, calculated from the probabilities given by the models and Metaculus and from the implied probabilities of the betting platforms, exchange, and prediction markets.

Prices, probabilities, and odds were captured on Election Day 2022 at around 10:30 a.m. from the various sites, with details for each platform at the end of this article.

PREDICTING WINNERS

Using a threshold of 0.50, every model and platform examined here missed Democrats holding Nevada, while Metaculus was the only one to correctly call both Georgia and Pennsylvania. The Economist (barely) called Pennsylvania correctly, while DDHQ (again, barely) correctly called Georgia.

FiveThirtyEight, the benchmark for election forecasting, missed the same three states (Georgia, Nevada, and Pennsylvania) that were missed by traders on PredictIt, Insight Prediction, Polymarket, and Smarkets, as well as gamblers on William Hill, SBK, Coral, and Betfred.

| Platform | States Predicted | States Correct | States Missed |
| --- | --- | --- | --- |
| FiveThirtyEight | 35 | 32 | GA, NV, PA |
| The Economist | 35 | 33 | GA, NV |
| DDHQ | 35 | 33 | NV, PA |
| William Hill | 16 | 13 | GA, NV, PA |
| Coral | 11 | 8 | GA, NV, PA |
| Betfred | 11 | 8 | GA, NV, PA |
| SBK | 14 | 11 | GA, NV, PA |
| Smarkets | 34 | 31 | GA, NV, PA |
| PredictIt | 18 | 15 | GA, NV, PA |
| Polymarket | 14 | 11 | GA, NV, PA |
| Insight Prediction | 8 | 5 | GA, NV, PA |
| Metaculus | 6 | 5 | NV |

On PredictIt and SBK, the implied probabilities for both parties in Arizona were at or above 0.50. PredictIt had the Republican candidate at 0.505 and the Democratic candidate at 0.525, while SBK had the Republican at exactly 0.50 and the Democrat at 0.5625. We classify both as correctly predicting a Democratic victory: because each pair of implied probabilities sums to more than 1, rescaling them to sum to 1 leaves the Democratic candidate above 0.50 on both platforms.
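The Arizona adjustment can be checked directly. The sketch below rescales each platform's implied probabilities proportionally so they sum to 1 and then applies the 0.50 threshold. Proportional rescaling is an assumption (the article does not say which method it used), and the function names `normalize` and `favored` are hypothetical.

```python
from __future__ import annotations


def normalize(implied: dict[str, float]) -> dict[str, float]:
    """Rescale implied probabilities proportionally so they sum to 1."""
    total = sum(implied.values())
    return {cand: p / total for cand, p in implied.items()}


def favored(implied: dict[str, float], threshold: float = 0.50) -> str | None:
    """Return the candidate whose normalized probability exceeds the threshold, or None."""
    for cand, p in normalize(implied).items():
        if p > threshold:
            return cand
    return None


# Arizona implied probabilities quoted in the paragraph above
predictit_az = {"REP": 0.505, "DEM": 0.525}
sbk_az = {"REP": 0.5, "DEM": 0.5625}

for name, market in [("PredictIt", predictit_az), ("SBK", sbk_az)]:
    rounded = {c: round(p, 4) for c, p in normalize(market).items()}
    print(name, rounded, favored(market))
# PredictIt {'REP': 0.4903, 'DEM': 0.5097} DEM
# SBK {'REP': 0.4706, 'DEM': 0.5294} DEM
```

Proportional rescaling is the simplest way to strip out the overround; other de-margining methods exist and could shift these numbers slightly, though not across 0.50 here.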

Even if we counted Arizona as a miss for PredictIt and SBK, there doesn’t seem to be evidence to support the claim that “all the prediction markets failed spectacularly.” Overall, prediction market traders and gamblers performed the same as or slightly worse than the election models, while Metaculus users outperformed all three models.

BRIER SCORES

The Brier score is a scoring rule that measures the accuracy of probabilistic predictions.

The election outcome is quantified as either 1 (candidate won the Senate race) or 0 (candidate lost the Senate race). The error for each prediction is defined as the difference between the election outcome and the model’s probability (or prediction market or bookmaker’s implied probability) of that candidate winning. The simple average of all the squared errors makes up a model’s Brier score. Brier scores range from 0 to 1, and lower is better.
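The definition above translates directly into a few lines of code. This is a minimal sketch; the function name `brier_score` and the example probabilities are hypothetical.

```python
from __future__ import annotations


def brier_score(probs: list[float], outcomes: list[int]) -> float:
    """Mean squared difference between forecast probabilities and 0/1 outcomes."""
    if len(probs) != len(outcomes) or not probs:
        raise ValueError("need equal-length, non-empty lists")
    return sum((o - p) ** 2 for p, o in zip(probs, outcomes)) / len(probs)


# Hypothetical two-candidate race where the eventual winner was given 0.8:
# each error is 0.2, each squared error 0.04, so the score is about 0.04.
print(brier_score([0.8, 0.2], [1, 0]))

# Forecasting 0.50 for every candidate scores 0.25 whatever the outcome.
print(brier_score([0.5, 0.5], [1, 0]))  # 0.25
```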

Because the set of races offered varied from market to market, we break down the Brier score comparisons by platform, scoring each group only on the predictions its members have in common. Not every platform forecast, or offered odds on, independent candidates such as Evan McMullin in Utah.
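Restricting each comparison to shared predictions can be sketched as follows. The data layout (dictionaries keyed by a hypothetical `(state, party)` tuple), the function name `shared_brier`, and all numbers are illustrative assumptions, not the article's data.

```python
from __future__ import annotations

Key = tuple[str, str]  # (state, party)


def shared_brier(forecasts: dict[str, dict[Key, float]],
                 outcomes: dict[Key, int]) -> dict[str, float]:
    """Brier score per platform, using only predictions every platform made."""
    common = set(outcomes)
    for preds in forecasts.values():
        common &= set(preds)
    if not common:
        raise ValueError("no predictions in common")
    keys = sorted(common)
    return {
        name: sum((outcomes[k] - preds[k]) ** 2 for k in keys) / len(keys)
        for name, preds in forecasts.items()
    }


# Hypothetical numbers: platform B has no price on UT-IND, so that
# prediction is dropped for both platforms.
results = {("OH", "REP"): 1, ("OH", "DEM"): 0, ("UT", "REP"): 1, ("UT", "IND"): 0}
platforms = {
    "A": {("OH", "REP"): 0.8, ("OH", "DEM"): 0.2, ("UT", "REP"): 0.9, ("UT", "IND"): 0.1},
    "B": {("OH", "REP"): 0.7, ("OH", "DEM"): 0.3, ("UT", "REP"): 0.85},
}
print(shared_brier(platforms, results))
```

Dropping predictions rather than filling them in keeps every platform scored on identical questions, which is why the same platform can post quite different Brier scores in different comparisons.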

Metaculus users forecast six Senate races: AZ, GA, NV, OH, PA, and WI. Comparing Brier scores on those six races, Metaculus users were the most accurate, while PredictIt was essentially a coin flip (forecasting 0.50 for every candidate would score exactly 0.25):

| Platform | Brier score |
| --- | --- |
| Metaculus | 0.1658 |
| The Economist | 0.1761 |
| DDHQ | 0.1812 |
| FiveThirtyEight | 0.1930 |
| Coral | 0.2214 |
| Insight Prediction | 0.2267 |
| William Hill | 0.2277 |
| Smarkets | 0.2316 |
| Polymarket | 0.2356 |
| SBK | 0.2415 |
| Betfred | 0.2422 |
| PredictIt | 0.2513 |

In the 16 Senate races where the William Hill bookmaker offered action, gamblers did worse than the models:

| Platform | Brier score |
| --- | --- |
| The Economist | 0.0713 |
| FiveThirtyEight | 0.0802 |
| DDHQ | 0.0815 |
| Smarkets | 0.0948 |
| William Hill | 0.0949 |

Comparing PredictIt and Smarkets in the 18 Senate races that PredictIt offered, we see that American gamblers once again performed worse than British gamblers:

| Platform | Brier score |
| --- | --- |
| Smarkets | 0.0823 |
| PredictIt | 0.0924 |

To compare Smarkets with the three election models, we drop both parties in CA and the Democrat in ND (Smarkets offered no market on any of the three), along with all independents, so Evan McMullin’s chances aren’t factored in here. That leaves 66 predictions across 34 races:

| Platform | Brier score |
| --- | --- |
| The Economist | 0.0346 |
| FiveThirtyEight | 0.0389 |
| DDHQ | 0.0396 |
| Smarkets | 0.0463 |

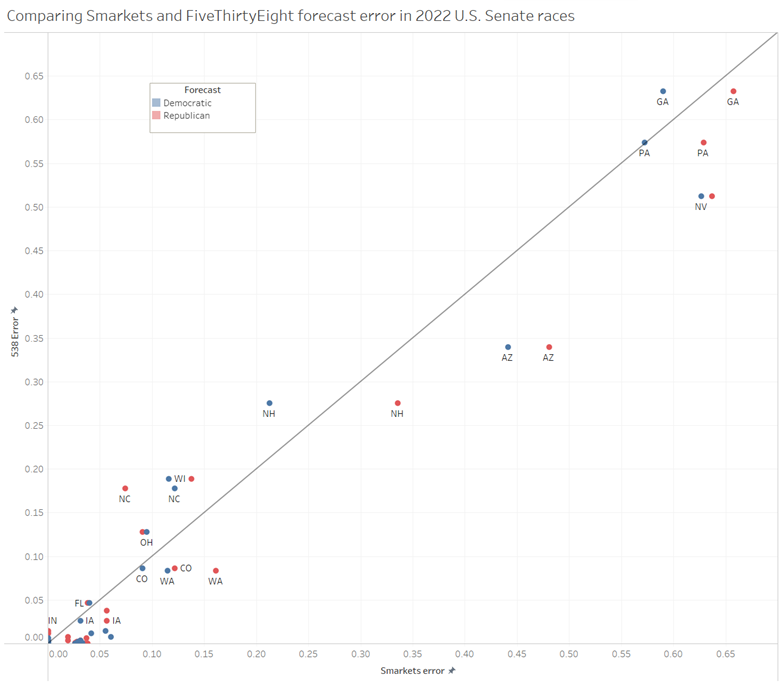

The models earned lower Brier scores than traders and gamblers because of the certainty of their predictions, not necessarily because they correctly predicted more winners. For example, Smarkets and FiveThirtyEight missed the same three senate races using a 0.5 threshold, but FiveThirtyEight had a lower Brier score because the model was “more certain” in the correct direction more often than Smarkets’ bettors. Of the 66 overlapping predictions, FiveThirtyEight had a smaller forecast error than Smarkets in 39. In the six “wrong” predictions that created relatively large errors for both, FiveThirtyEight had a smaller error than Smarkets in five. Below is a plot of the respective errors for both platforms:
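The head-to-head count above (how often one platform's error was smaller than the other's) can be sketched as below. The function name `smaller_error_count`, the `"STATE-PARTY"` keys, and the example values are hypothetical; counting only strictly smaller errors is an assumption, since the article doesn't say how ties were handled.

```python
from __future__ import annotations


def smaller_error_count(a: dict[str, float], b: dict[str, float],
                        outcomes: dict[str, int]) -> tuple[int, int]:
    """Count shared predictions where platform a's error is strictly smaller than b's.

    Returns (count_where_a_is_smaller, number_of_shared_predictions).
    Comparing absolute errors gives the same ranking as comparing squared errors.
    """
    shared = set(a) & set(b) & set(outcomes)
    wins = sum(
        1 for k in shared
        if abs(outcomes[k] - a[k]) < abs(outcomes[k] - b[k])
    )
    return wins, len(shared)


# Hypothetical values
outcomes = {"NV-DEM": 1, "NV-REP": 0, "OH-REP": 1}
model = {"NV-DEM": 0.45, "NV-REP": 0.55, "OH-REP": 0.85}
market = {"NV-DEM": 0.35, "NV-REP": 0.65, "OH-REP": 0.88}
print(smaller_error_count(model, market, outcomes))  # (2, 3)
```

In this toy example the model misses Nevada just as the market does, but by less, which is the same pattern the article describes for FiveThirtyEight and Smarkets.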

We previously compared Brier scores among the three election models in all 35 U.S. Senate races.

PLATFORM DETAILS

Smarkets & SBK

Smarkets is a British online betting exchange that allows peer-to-peer wagers on sports and politics. SBK is a British sports betting app created by Smarkets, and both share the same political betting guru, Matthew Shaddick. Probabilities from Smarkets were taken directly from the platform, while odds from SBK were retrieved from oddschecker.com and converted into implied probabilities.

William Hill, Coral, & Betfred

William Hill, Coral, and Betfred are three established British bookmakers. Political gambling is legal in England and the British love betting on American politics. Odds for all three were taken from oddschecker.com and converted into implied probabilities.
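Converting bookmaker odds into implied probabilities is simple arithmetic. Oddschecker typically displays UK odds in fractional form (for example 7/4), and decimal odds are the other common format. The article doesn't say which format it converted from, so this sketch covers both; the function names are hypothetical.

```python
def implied_from_fractional(odds: str) -> float:
    """Fractional odds a/b (win a for every b staked) imply b / (a + b)."""
    num, den = (int(x) for x in odds.split("/"))
    return den / (num + den)


def implied_from_decimal(odds: float) -> float:
    """Decimal odds d (total return per unit staked) imply 1 / d."""
    return 1 / odds


print(implied_from_fractional("1/1"))  # evens -> 0.5
print(implied_from_fractional("7/9"))  # 9/16 -> 0.5625
print(implied_from_decimal(2.5))       # 0.4
```

Because each bookmaker builds a margin into its prices, the implied probabilities for all candidates in a race usually sum to more than 1.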

PredictIt

PredictIt is a political prediction market run by the Victoria University of Wellington, New Zealand, and Aristotle International of Washington, DC. PredictIt was ordered by the Commodity Futures Trading Commission to end operations by February 15, 2023. The following formula was used to find an estimated price for one individual contract from the four listed prices:

(bestBuyYesCost + (1 - bestBuyNoCost) + bestSellYesCost + (1 - bestSellNoCost)) / 4

For certain PredictIt contracts with a zero value for “bestBuyNoCost” (Alaska DEM, Alaska IND, Oregon IND, Utah DEM, Vermont IND) or “bestBuyYesCost” (Alaska REP and Missouri REP), we assigned that value as 1.
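The price formula and the zero-value rule above can be combined into one small function. This sketch assumes the four quotes for a contract arrive as a dictionary keyed by the field names in the formula; treating a missing (`None`) buy quote the same as zero is an assumption, and zero sell quotes are left as-is because the article doesn't mention them. The function name and example quotes are hypothetical.

```python
from __future__ import annotations


def predictit_price(contract: dict[str, float | None]) -> float:
    """Average the four order-book quotes into one estimated Yes price."""

    def buy_cost(key: str) -> float:
        # Per the article, a zero buy quote is assigned a value of 1.
        # Treating a missing (None) quote the same way is an assumption.
        value = contract.get(key)
        return 1.0 if not value else value

    buy_yes = buy_cost("bestBuyYesCost")
    buy_no = buy_cost("bestBuyNoCost")
    sell_yes = contract["bestSellYesCost"]
    sell_no = contract["bestSellNoCost"]
    return (buy_yes + (1 - buy_no) + sell_yes + (1 - sell_no)) / 4


# Hypothetical quotes for a contract trading around 0.60
print(predictit_price({"bestBuyYesCost": 0.61, "bestBuyNoCost": 0.41,
                       "bestSellYesCost": 0.59, "bestSellNoCost": 0.38}))

# Hypothetical long shot with no No offers: bestBuyNoCost of 0 becomes 1
print(predictit_price({"bestBuyYesCost": 0.03, "bestBuyNoCost": 0,
                       "bestSellYesCost": 0.02, "bestSellNoCost": 0.98}))
```

Setting an empty buy quote to 1 makes its term contribute either a full 1 (Yes side) or 0 (No side), which pushes the estimate toward the side the market already heavily favors.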

Polymarket

Polymarket is a decentralized prediction market built on the Polygon blockchain. Traders buy and sell using a stablecoin pegged to the U.S. dollar (“USD Coin”). Polymarket was forced to shut down its U.S. operations in January 2022, but trading remains open to people outside the United States. Prices were taken from the Polymarket Whales website.

Insight Prediction

Insight Prediction is a new prediction market founded by Douglas Campbell. US persons are currently excluded from trading on Insight Prediction. The prices used are the “latest yes price,” which Insight quotes to the thousandth place.

Metaculus

Metaculus is a forecasting platform (not a ‘prediction market’) that aggregates predictions by encouraging users to compete in predicting probabilities on certain questions of “global importance.” No money is charged or exchanged, although certain contests have cash prizes. Participation is typically low, but Metaculus was still the only platform to correctly forecast Democrats winning both Pennsylvania and Georgia. The probability used is the unweighted median community prediction for each question.

For further reading on the performance of prediction markets in the 2022 Senate races, check out Maxim Lott’s comparison of FiveThirtyEight with ElectionBettingOdds.com.